Cocobot

Overview

Family caregivers often develop mental health concerns because caregiving is stressful. However, many lack access to credible resources, and some even feel guilty about spending time on their own care.

"My daughter has severe asthma. I can't stop worrying about her and always feel stressed."

My team and I are building on Dr. Yuwen's existing research. Her research identified an opportunity to provide dedicated, self-driven tools that reduce stress for family caregivers, which can have a long-term impact on their health care outcomes.

Approaches

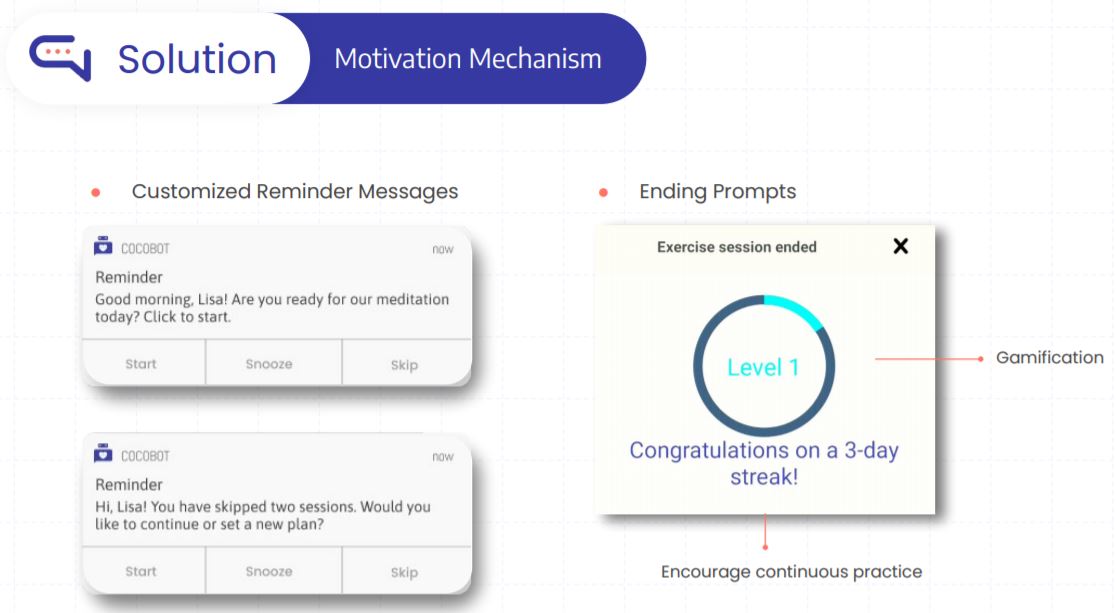

Based on Dr. Yuwen's academic research and our extensive first-hand interviews with users, we decided to address the problem from two directions: keep users engaged, and keep putting fresh, tailored content in front of them.

Our Solution

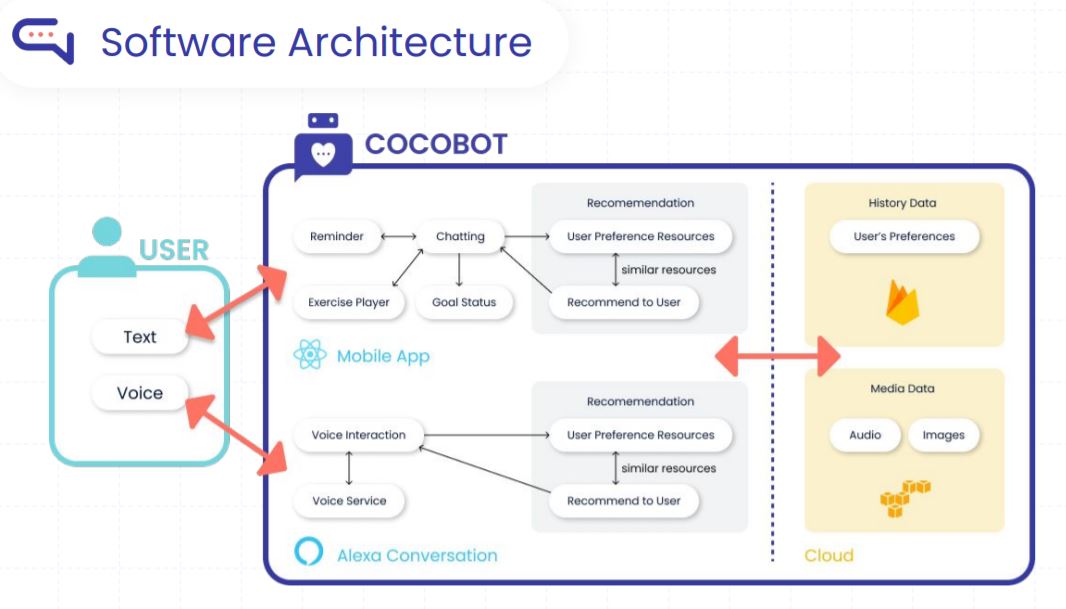

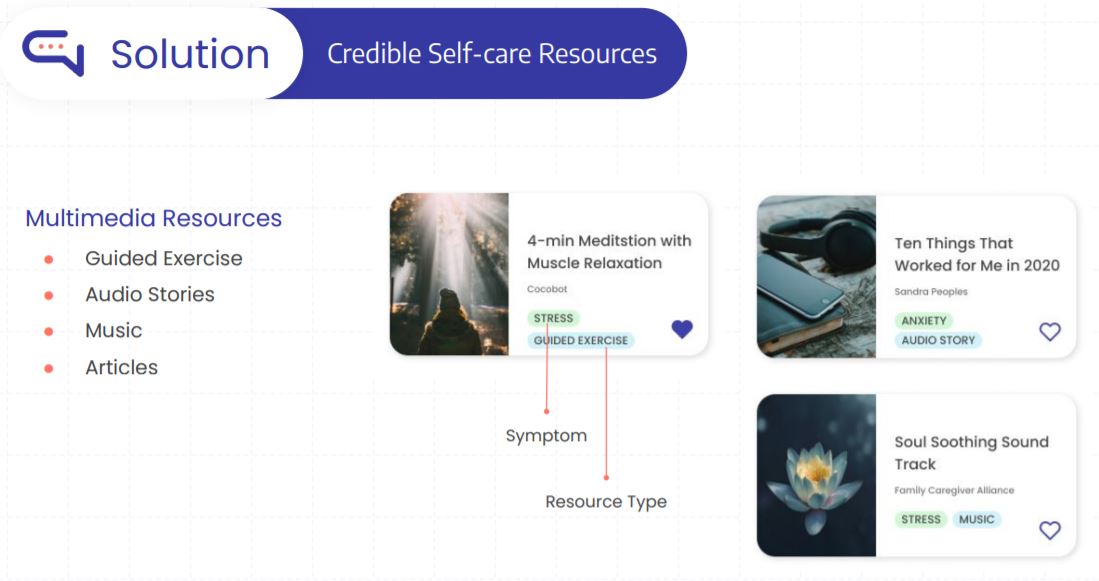

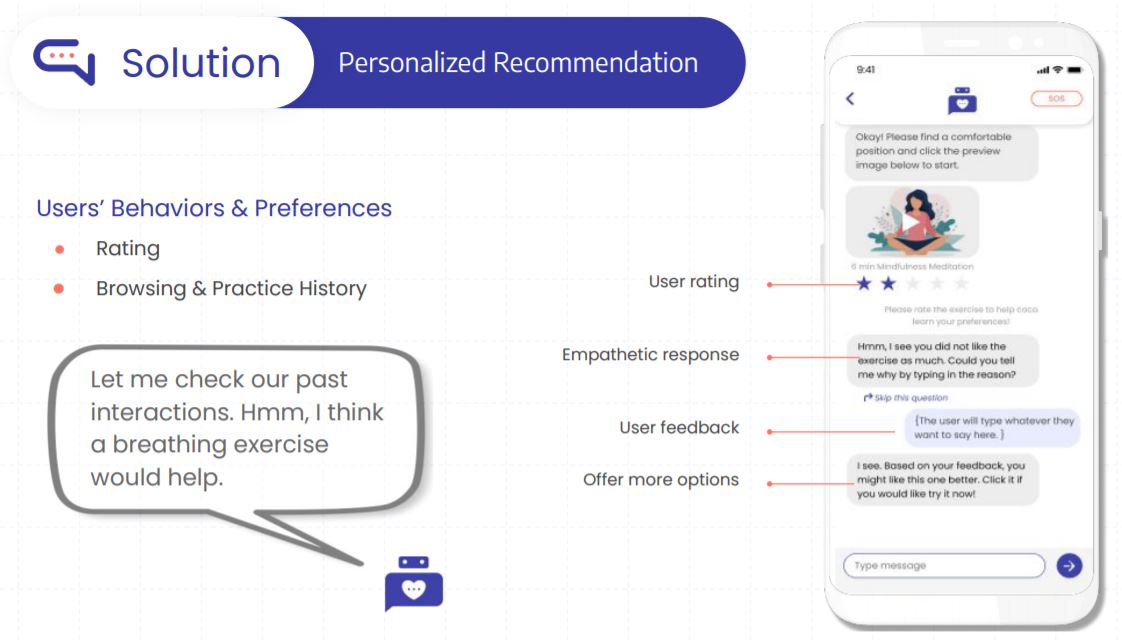

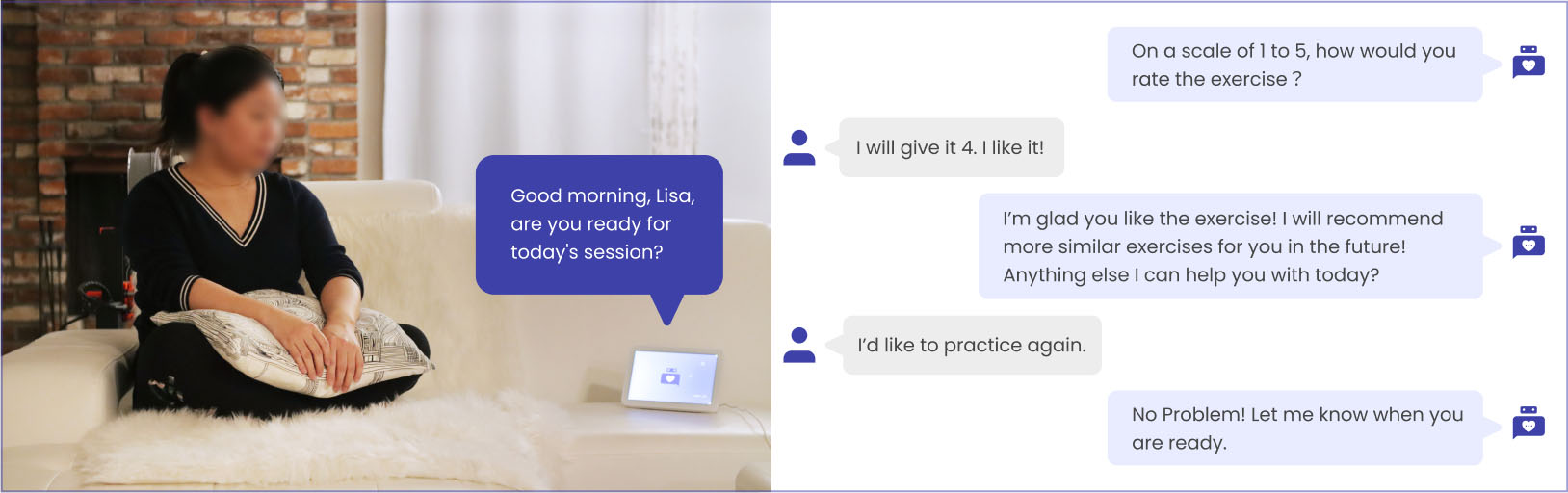

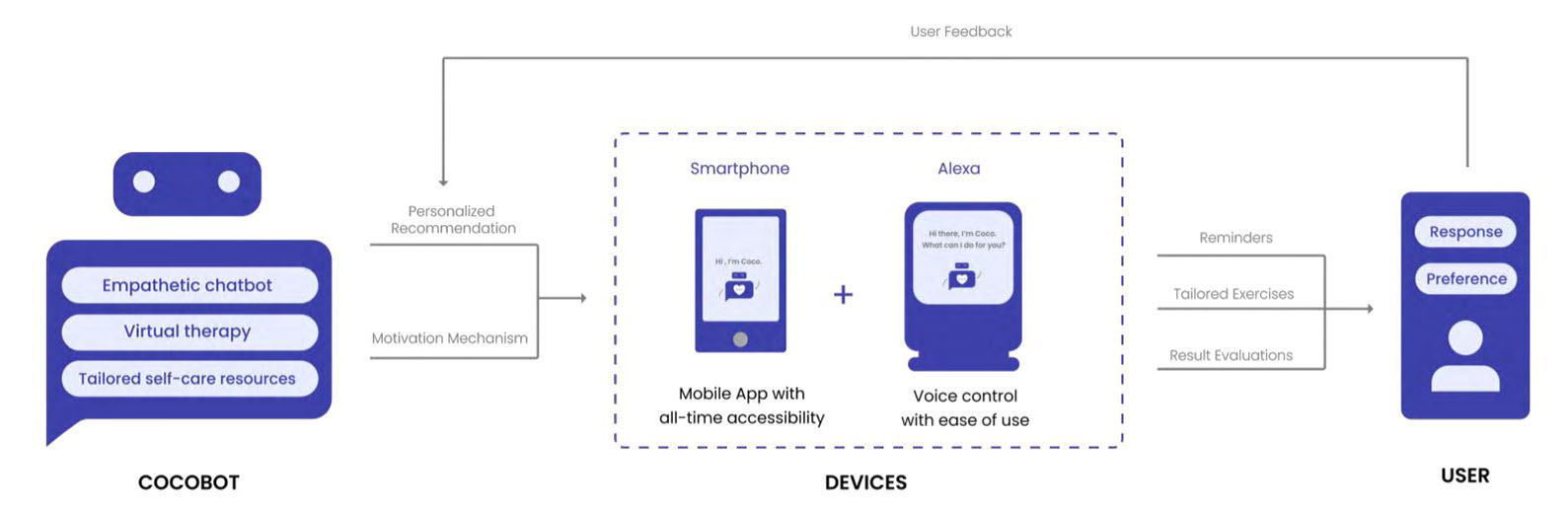

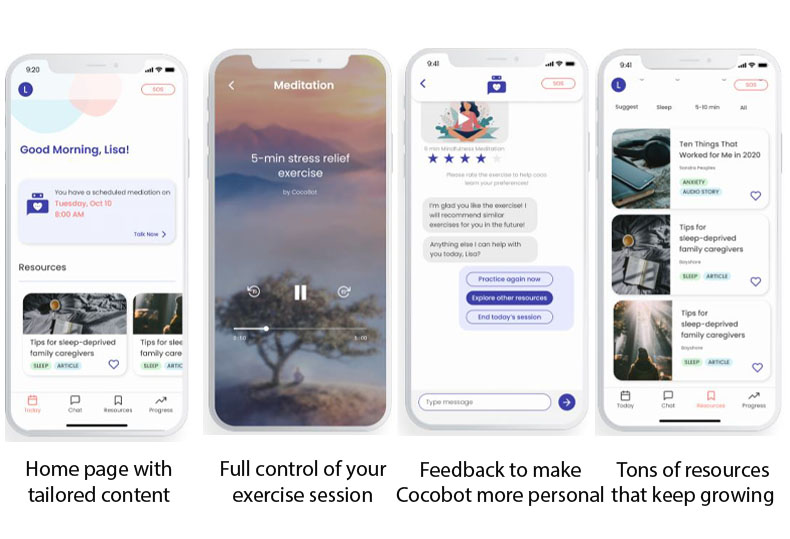

Building on the existing Coco app, which guides users to identify problems and set up self-care goals through text conversations, our team added tailored content suggestions and voice interactions to help users achieve their goals. We designed mechanisms for Cocobot to learn users' behaviors over time by collecting users' feedback after each session, tracking their practice history, and monitoring their emotional changes. With customized experiences and flexible interactions, we encourage users to stay engaged and form long-term self-care habits.
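As a rough illustration of how per-session feedback, practice history, and mood changes could feed content suggestions, here is a minimal Python sketch. The `SessionRecord` fields, the 1 to 5 scales, the content tags, and the scoring rule (rating plus mood change) are all assumptions made for this example; they do not describe Cocobot's actual data model or ranking logic.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SessionRecord:
    """One completed self-care session (hypothetical schema)."""
    content_tag: str   # e.g. "breathing" or "sleep"; tags are illustrative
    rating: int        # user feedback for the session, 1 (poor) to 5 (great)
    mood_before: int   # self-reported mood before the session, 1 to 5
    mood_after: int    # self-reported mood after the session, 1 to 5


def rank_tags(history: List[SessionRecord]) -> List[str]:
    """Order content tags by average (rating + mood change), best first."""
    scores: Dict[str, List[int]] = defaultdict(list)
    for session in history:
        mood_change = session.mood_after - session.mood_before
        scores[session.content_tag].append(session.rating + mood_change)
    return sorted(
        scores,
        key=lambda tag: sum(scores[tag]) / len(scores[tag]),
        reverse=True,
    )


history = [
    SessionRecord("breathing", rating=5, mood_before=2, mood_after=4),
    SessionRecord("sleep", rating=3, mood_before=3, mood_after=3),
    SessionRecord("breathing", rating=4, mood_before=3, mood_after=3),
]
ranked = rank_tags(history)
```

A simple average like this would favor content a user both rates highly and feels better after; a real system would likely also weigh recency and how often each item has already been shown.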

What I do

Design

After several rounds of investigation and research, our team decided to focus on voice interaction. To validate the idea quickly, the team accepted my suggestion that I design a mock-up voice interaction for early-stage user evaluation. Because Adobe XD's voice interaction features are compatible with Alexa, I used it to design our first prototype.

Software Development

Based on the results of testing the mock-up, I developed the first version of the Cocobot Alexa skill with Alexa Conversations (Beta). It uses AWS Lambda functions to connect to the dialogue model and stores self-care resource data in AWS S3. To train the model, I provided several example dialogues for the scenario and annotated them with the Coco API functions to capture potential conversation flows. Here is a system diagram, adapted from the official Alexa skill documentation. Skills repos:
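To make the Lambda and S3 pieces more concrete, here is a minimal Python sketch of a handler that answers an Alexa Conversations API call by looking up a self-care resource stored as JSON in S3. It is not the project's code: the bucket name, object key, API name `GetSelfCareResource`, the `goal` argument, and the resource fields are hypothetical placeholders, and the request and response shapes follow my understanding of the `Dialog.API.Invoked` format, so check them against the official documentation.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical placeholders, not taken from the project.
RESOURCE_BUCKET = "cocobot-self-care-resources"
RESOURCE_KEY = "resources.json"
API_NAME = "GetSelfCareResource"

Resource = Dict[str, str]


def load_resources_from_s3() -> List[Resource]:
    """Read the resource list from S3 (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the module also loads without AWS

    obj = boto3.client("s3").get_object(Bucket=RESOURCE_BUCKET, Key=RESOURCE_KEY)
    return json.loads(obj["Body"].read())


def pick_resource(resources: List[Resource], goal: str) -> Resource:
    """Return the first resource matching the goal, else the first one."""
    for resource in resources:
        if resource.get("goal") == goal:
            return resource
    return resources[0] if resources else {}


def lambda_handler(
    event: Dict[str, Any],
    context: Any,
    loader: Callable[[], List[Resource]] = load_resources_from_s3,
) -> Dict[str, Any]:
    """Handle a dialogue-model API call; `loader` is injectable for tests."""
    request = event.get("request", {})
    api_request = request.get("apiRequest", {})

    if request.get("type") != "Dialog.API.Invoked" or api_request.get("name") != API_NAME:
        # Anything else gets a plain spoken fallback.
        return {
            "version": "1.0",
            "response": {
                "outputSpeech": {"type": "PlainText", "text": "Sorry, I can't help with that yet."},
                "shouldEndSession": True,
            },
        }

    goal = api_request.get("arguments", {}).get("goal", "")
    return {
        "version": "1.0",
        "sessionAttributes": {},
        "response": {
            "apiResponse": pick_resource(loader(), goal),
            "shouldEndSession": False,
        },
    }
```

Passing the loader as a parameter keeps the S3 call out of the handler logic, so the handler can be exercised locally with an in-memory list before deploying.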

After another round of user testing, we found that a voice interface alone is not enough for users to understand the product. Users tend to rely more on the visual components to get a full picture of the product, and then use the voice interface as a more efficient way to call specific functions. Thus, besides continuing the Alexa skill development, I am following the previous design language from HCDE to develop a mini mobile app version. App repos:

Prototype and features